Hi, I am Zihang. I am a researcher at UC Berkeley and the International Computer Science Institute (ICSI), advised by Prof. Michael Mahoney. I also have the privilege of working closely with Prof. Yaoqing Yang from Dartmouth College. I previously obtained my Master’s degree in EECS at UC Berkeley.

My research focuses on understanding and improving the transparency and efficiency of learning models. I am particularly interested in phenomena such as low-rank structure, sparsity, and the geometry of weight matrices in deep learning models, drawing inspiration from high-dimensional statistics, random matrix theory, and randomized linear algebra. I also apply these techniques to discovering new (numerical) algorithms.

🔥 News

- 2025.06: Started a research engineer position at ICSI, UC Berkeley, working on numerical algorithm discovery with deep learning.

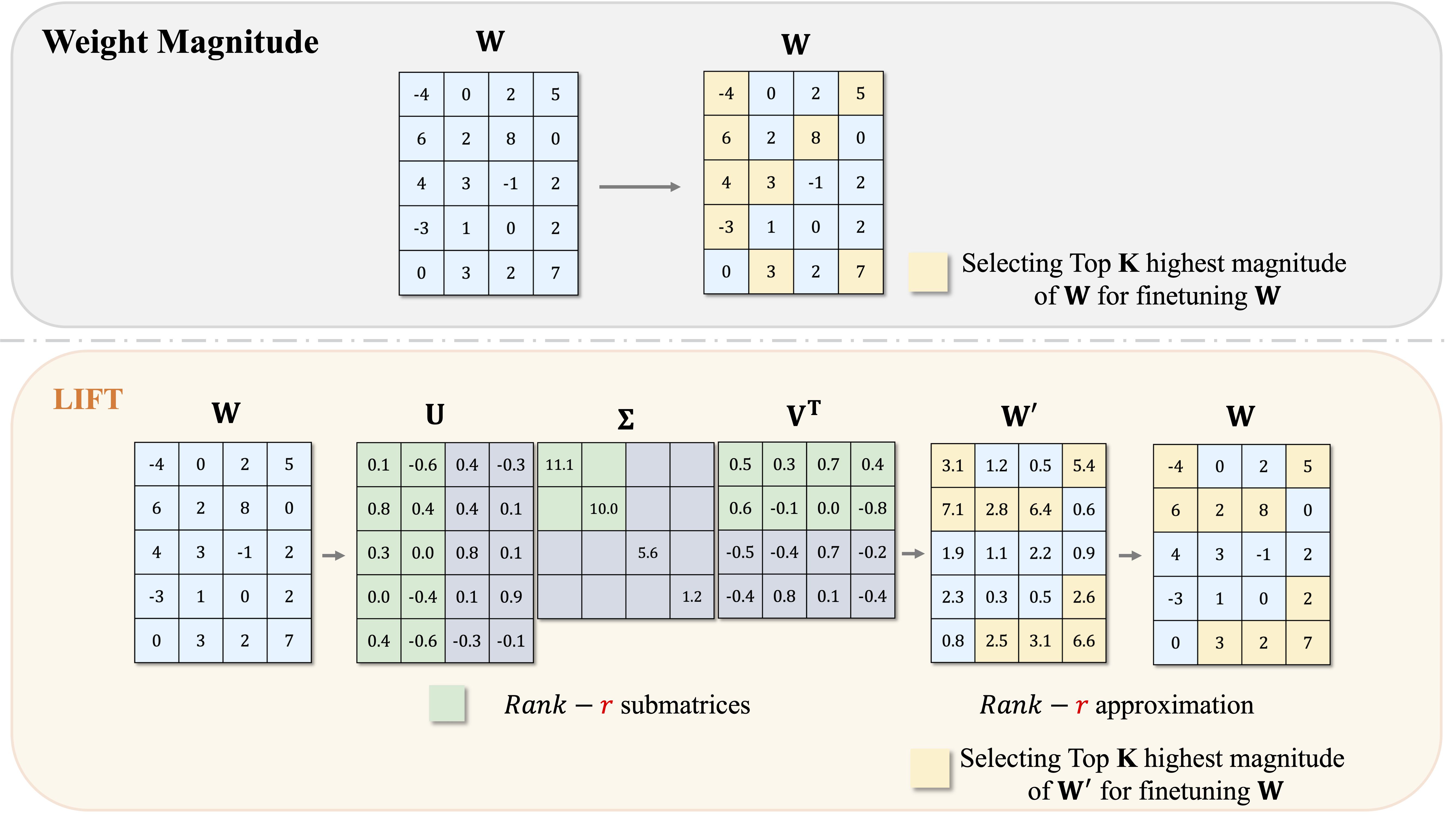

- 2025.05: Our paper “Principal Weights Emerge after Rank Reduction for Reasoning-Focused Supervised Fine-Tuning” has been accepted to ICML 2025.

- 2024.11: Gave a presentation at EMNLP 2024 on foundation model diagnosis; check out the live recording here.

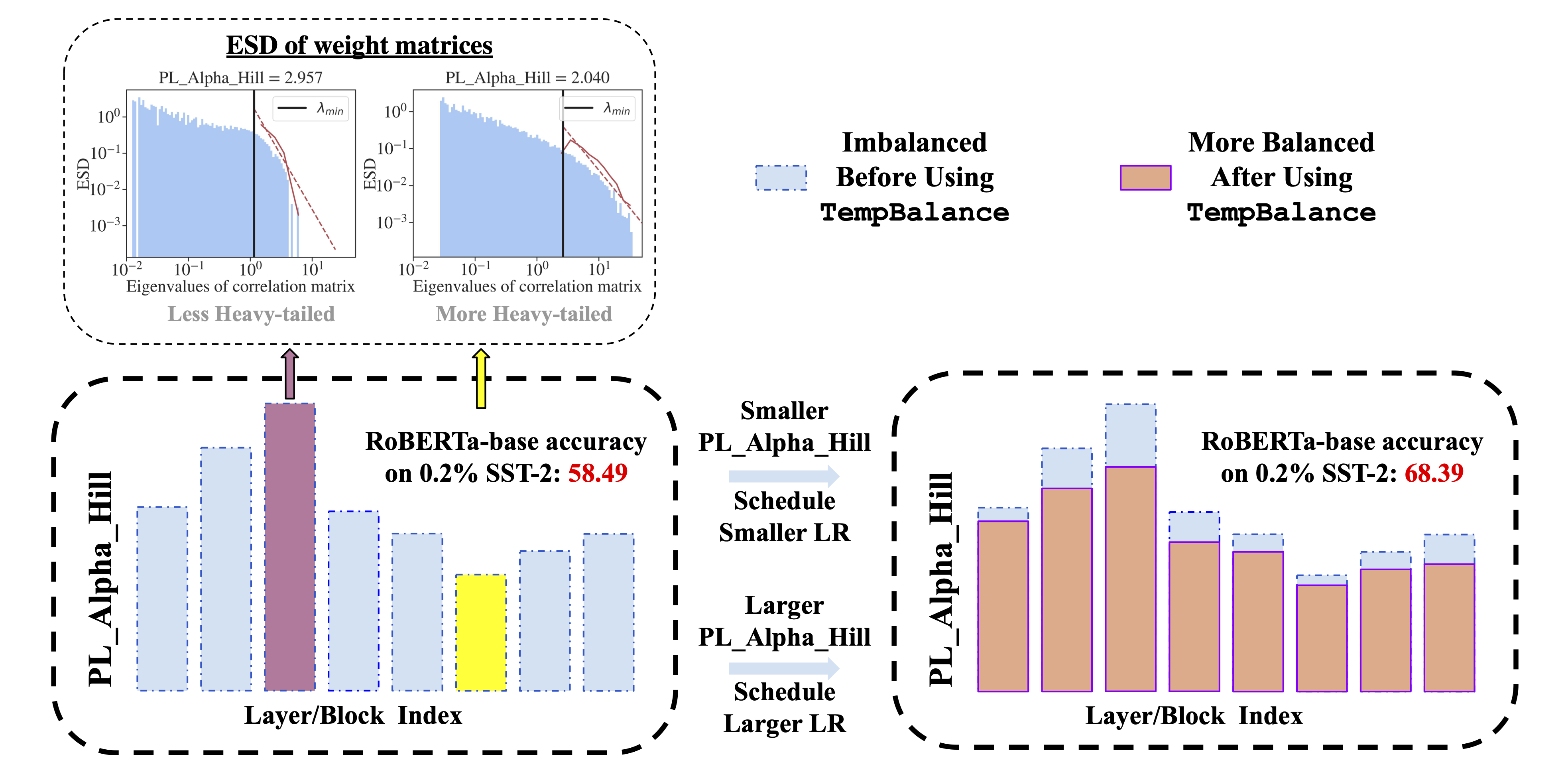

- 2024.09: Excited to share that our work “Model Balancing Helps Low-data Training and Fine-tuning” has been accepted to EMNLP 2024 as an oral presentation.

📝 Publications

Authors enclosed in {} (and marked with *) contributed equally.

LIFT the Veil for the Truth: Principal Weights Emerge after Rank Reduction for Reasoning-Focused Supervised Fine-Tuning

Zihang Liu, Tianyu Pang, Oleg Balabanov, Chaoqun Yang, Tianjin Huang, Lu Yin, Yaoqing Yang, Shiwei Liu

ICML 2025

Model Balancing Helps Low-data Training and Fine-tuning

{Zihang Liu*, Yuanzhe Hu*}, Tianyu Pang, Yefan Zhou, Pu Ren, Yaoqing Yang

EMNLP 2024 Oral